This new iteration introduces improvements and features aimed at better performance, higher image quality, and more reliable handling of complex prompts.

Key Features and Innovations

New Architecture and Enhanced Performance

Stable Diffusion 3 is built on a diffusion transformer architecture, a departure from the U-Net backbones used in previous Stable Diffusion versions. This new foundation makes more efficient use of computational resources during training and enables the model to generate higher-quality images. The model is also trained with flow matching, a technique for training Continuous Normalizing Flows (CNFs) in which the network learns a velocity field that transports noise samples to data samples. Flow matching contributes to faster training, more efficient sampling, and better overall results.
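To make the flow matching idea more concrete, here is a minimal NumPy sketch of the training objective on toy data. It is not Stability AI's implementation. It assumes the common straight-line path between a noise sample and a data sample, with noise at t = 0 and data at t = 1 (some formulations reverse this direction). The names `flow_matching_loss` and `velocity_fn` are hypothetical and chosen only for illustration.

```python
import numpy as np

def flow_matching_loss(velocity_fn, x1, rng):
    """Monte Carlo estimate of a conditional flow matching loss for one batch.

    Assumptions (not taken from the article): straight-line paths,
    noise at t=0, data at t=1, and a mean-squared-error objective.
    velocity_fn(xt, t) stands in for the network being trained.
    """
    x0 = rng.standard_normal(x1.shape)        # Gaussian noise, one sample per data point
    t = rng.uniform(size=(x1.shape[0], 1))    # one random time in [0, 1) per example
    xt = (1.0 - t) * x0 + t * x1              # point on the straight path from x0 to x1
    target = x1 - x0                          # constant velocity along that path
    pred = velocity_fn(xt, t)                 # model's predicted velocity at (xt, t)
    return float(np.mean((pred - target) ** 2))

# Usage on toy 2-D "data": an untrained predictor that always outputs zero.
rng = np.random.default_rng(0)
data = np.full((64, 2), 3.0)
baseline = flow_matching_loss(lambda xt, t: np.zeros_like(xt), data, rng)
print(f"loss of zero-velocity baseline: {baseline:.3f}")
```

In a real training loop, `velocity_fn` would be the diffusion transformer (conditioned on the text prompt), and the loss would be minimized with gradient descent. Sampling then amounts to integrating the learned velocity field from noise toward data.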

Expanded Model Range

To cater to a wide range of user needs, Stable Diffusion 3 is offered as a family of models ranging from 800 million to 8 billion parameters. Users can choose the size that best fits their requirements: larger models favor image quality, while smaller models favor computational efficiency.
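As a rough illustration of what this size range means in practice, the sketch below estimates the memory needed just to hold the weights. It assumes 2 bytes per parameter (16-bit floats), which is an assumption rather than a published figure. It also ignores activations, text encoders, and any other runtime overhead. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights; use 4 for 32-bit floats.
    """
    return num_params * bytes_per_param / 1e9

# Smallest and largest sizes mentioned in the text.
for label, n in [("800M", 800_000_000), ("8B", 8_000_000_000)]:
    print(f"{label}: about {weight_memory_gb(n):.1f} GB of weights at 16-bit precision")
```

Under these assumptions, the largest model needs roughly ten times the weight memory of the smallest, which is one reason a range of sizes matters for local use.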

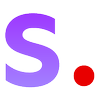

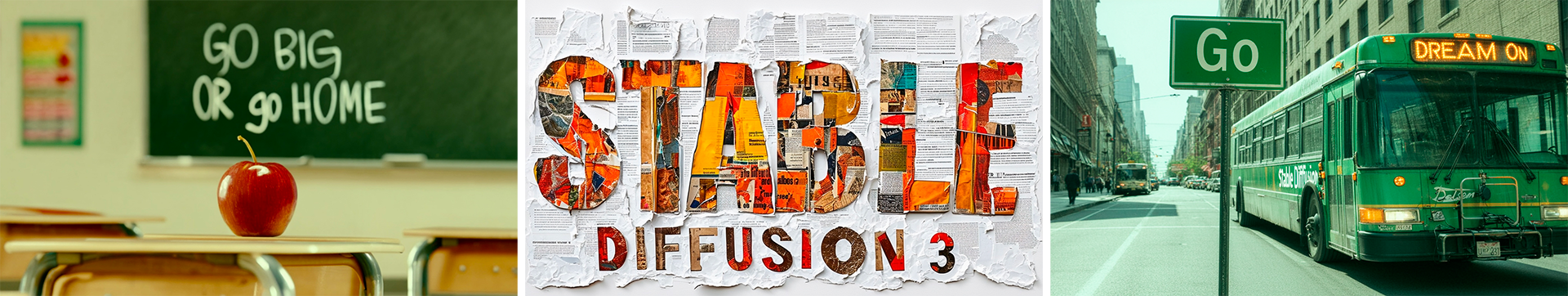

Improved Multi-Subject Prompt Handling and Typography

One of the standout improvements in Stable Diffusion 3 is its handling of multi-subject prompts: it can generate images that accurately represent complex scenes containing several distinct subjects. The model also renders typography significantly better, addressing a weakness of earlier versions by producing more accurate and consistent text within generated images.

Safety and Accessibility

Stability AI emphasizes safe and responsible AI practices, implementing numerous safeguards to prevent misuse of Stable Diffusion 3 by bad actors. The company's commitment to democratizing access to generative AI technologies is evident in its decision to offer a variety of model options and to eventually make the model's weights freely available for download and local use.

Future Directions

While Stable Diffusion 3 initially focuses on text-to-image generation, its underlying architecture lays the groundwork for future expansions into 3D image generation and video generation. This versatility underscores Stability AI's ambition to develop a comprehensive suite of generative models that can cater to a broad spectrum of creative and commercial applications.

Conclusion

Stable Diffusion 3 is a major advancement in text-to-image AI, offering higher image quality, better handling of complex prompts, and a range of model sizes. As Stability AI develops the model further, Stable Diffusion 3 promises to reshape creative expression across multiple fields.